Among the many types of indexes available in PostgreSQL, one of them is covering index. This type of index might be especially useful when you frequently run a query that contains specific list of selected columns. This type of index includes certain columns that are not part of the WHERE clause but are part of the SELECT clause. This strategy reduces the number of I/O operations during data retrieval.

Applying covering index

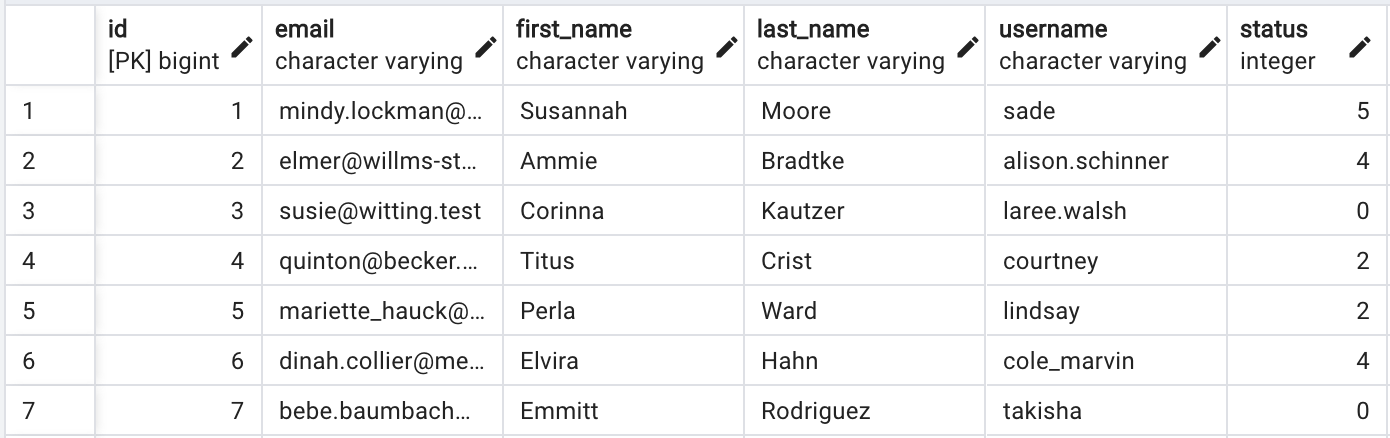

The syntax for applying such an index is quite straightforward. Let's consider a simple example with the users table. Dataset is rather randomized and table contains around 5 milions of records.

Let’s assume that we want to search some users directly by their username - for this solution is simple - we can apply regular B-Tree index on username field:

CREATE INDEX idx_users_on_username ON users (username)

This is a classic index for a common search by specific field and it works pretty fine. So, what is the purpose of creating something like covering indexes?

Covering indexes are beneficial when your application often queries the database to select specific columns. For example, if your query searches by username, but also selects the firstname and lastname columns, which are always rendered in the view. So if your query looks more or less like this

SELECT first_name, last_name, username FROM users WHERE username = ‘kowalsky’

you may bring covering index into play.

Creating such an index relies on adding INCLUDE keyword and apply desirable columns:

CREATE INDEX idx_users_on_username ON users (username) INCLUDE (first_name, last_name)

This is how it looks directly in PostgreSQL. Let’s see how to use covering indexes with Rails app and do some performance tests.

Covering index in Rails

Rails 7.1 introduced covering indexes, providing support for migration methods and schema definition. Migration for such index looks like this:

class AddUsernameIndexOnUsers< ActiveRecord::Migration[7.1]

def change

add_index :users, :username, include: [:first_name, :last_name]

end

end

It is a common method for adding index, the only difference is parameter include which defines additional fields.

Ok, lets do some quick performance tests for similar query I’ve presented in previous section, which in Rails will look like below. You can jump to the summary table at the bottom of this part if you are not interested in the details.

usernames = ['sade', 'alison.schinner', 'lindsay', 'takishka', 'cole_marvin', 'courtney']

User.select(:username, :first_name, :last_name).where(username: usernames)

Additionally, I've created a method that uses a sample amount of runs to analyze the average time of query execution:

def execution_benchmark(num_runs)

total_execution_time = 0

i = 0

num_runs.times do

i = i + 1

usernames = [

'sade', 'alison.schinner', 'lindsay', 'takishka', 'cole_marvin', 'courtney'

]

lower_result = User.select(:username, :first_name, :last_name)

.where(username: usernames)

.explain(:analyze)

lower_execution_time = lower_result.split("\n")[-2]

.match(/Execution Time: (\d+\.\d+) ms/)[1]

.to_f

total_execution_time += lower_execution_time

puts lower_result if i == num_runs

end

average_lower_time = total_execution_time/num_runs

puts average_lower_time

end

Without index

Initially, I will attempt this without any index. I'll run the EXPLAIN ANALYZE command for this query and calculate the average time over 100 runs:

Gather (cost=1000.00..129323.41 rows=19 width=38) (actual time=745.861..1410.638 rows=5 loops=1)

Workers Planned: 2

Workers Launched: 2

-> Parallel Seq Scan on users (cost=0.00..128321.51 rows=8 width=38) (actual time=666.999..1281.547 rows=2 loops=3)

Filter: ((username)::text = ANY ('{sade,alison.schinner,lindsay,takishka,cole_marvin,courtney}'::text[]))

Rows Removed by Filter: 1712342

Planning Time: 0.251 ms

Execution Time: 1412.839 ms

AVERAGE TIME FOR 100 RUNS - 1749.4724100000003 ms

Standard index

Next in order will common index on username field - without includes:

Index Scan using index_users_on_username on users (cost=0.43..102.92 rows=19 width=38) (actual time=0.018..0.042 rows=5 loops=1)

Index Cond: ((username)::text = ANY ('{sade,alison.schinner,lindsay,takishka,cole_marvin,courtney}'::text[]))

Planning Time: 0.065 ms

Execution Time: 0.051 ms

AVERAGE TIME FOR 100 RUNS - 0.04026799999999996 ms

With covering index

And the last one - index on username field with includes for first_name and last_name:

Index Only Scan using index_users_on_username on users (cost=0.56..39.67 rows=19 width=38) (actual time=0.008..0.023 rows=5 loops=1)

Index Cond: (username = ANY ('{sade,alison.schinner,lindsay,takishka,cole_marvin,courtney}'::text[]))

Heap Fetches: 0

Planning Time: 0.042 ms

Execution Time: 0.028 ms

AVERAGE TIME FOR 100 RUNS - 0.043859000000000016 ms

Summary table:

| No index | Standard index | Index with include | |

|---|---|---|---|

| Average time | 1749.4724 ms | 0.04027 ms | 0.04386 ms |

| Cost | 129323.41 | 102.92 | 39.67 |

Explain and analyze

Now let's analyze the results from the performance tests. As expected, the first example without an index resulted in a sequential scan. This led to a high execution time and high cost for the query, making it not noteworthy. What is actually interesting, are results from the next of two:

Average time:

- Common B-Tree index - 0.04026799999999996 ms

- Covering B-Tree index - 0.043859000000000016 ms

Cost:

- Common B-Tree index - 0.43..102.92

- Covering B-Tree index - 0.56..39.67

It's clear that the execution time remains virtually the same, falling within the margin of statistical error. The thing, which we can focus on, is the cost of executing query. In the context of database query optimization, "cost" refers to an estimate of the computational resources required to execute a query. The cost is expressed in an arbitrary unit, including factors such as I/O operations, CPU usage, and memory consumption. Lower costs typically indicate more efficient query execution plans.

So, going back to results, we can see, that the total cost is now 39.67, significantly lower than the 102.92 we incurred when using an unique index without the INCLUDE clause. This cost reduction was achieved because the query only scanned the index to retrieve the data without touching the table. Additional info that table is not accessed comes from heap fetches - it equals 0.

Conclusion

In this article, I've provided a quick introduction on how to use covering indexes and the advantages it offers. Despite its applications being somewhat limited due to its specialization for specific select fields, it can be beneficial in reducing the number of I/O operations, as our data is fetched directly from the index, not the table itself. Thus, utilizing a covering index can slightly reduce the resources used during querying your database, when you select certain columns together.

.jpg)

.jpg)